Limitations and Trends: Audio AI LLMs: (part 3)

Author's Note: This is the third article in a series that documents changes in the Audio-LLM ("AI") landscape. It takes the form of a research log intended to capture a rough snapshot of ongoing developments. It is not my intention to delve into the ethical implications of these tools. In the future, I plan to dive deeper into the topics below. Stay tuned! 🔊

Part 1. Pink Beatles in a Purple Zeppelin

Part 2. Unnatural Selection

Part 3. Resilient

This article on the limitations of "AI" or Large Language Models (LLMs) for audio production has been in the works for 6 months. Every time I sat down to write, I'd find that everything I had previously drafted was woefully out of date.

Part 2, published this past August, feels like it was written in another era when only a select few models had front-end GUIs, HuggingFace[dot]co hadn't proclaimed itself the 'Home of Machine Learning', and so on.

The changes that have taken place in the last 6 months are staggering. We are experiencing a Cambrian explosion of LLM proliferation; and as if to emphasize this point, as I was getting ready to hit publish on this article, I learned Coca-Cola released a Coke-branded tool for sound design. I wish the tool instead would have been released as an homage to the synthesizing pioneer Suzanne Ciani who played an integral role in the Coca-Cola brand sound. But, So it goes. (An interview with Ciani, Some of her music).

The research process for this article has made me feel very much like a passenger on Wonka's River Boat, with the Rowers Rowing, and..well, times are weird, as you'll see. The world of human deepfakes, of LLM "AI" music and tools has arrived. The time is not nigh, it is now.

This article is divided into four sections.

- Music LLM Demo

- Speech Translation & Dubbing

- Model Comparisons (Music & Sfx)

- Limitations of LLM Tech & Trends

A list of the LLM Tools featured in this article.

- AudioLDM 2 by University of Surrey and ByteDance.

- Riffusion, by Seth Forsgren and Hayk Martiros.

- Stable Audio, by Stability AI.

- Music FX, by Google's AI Test Kitchen team.

- Udio, by former Google DeepMind researchers.

1. Music Production

While the most surprising discovery comes in the next section (dubbing and translation), music "AI" provides a compelling glimpse at where the technology is generally headed for Sfx production.

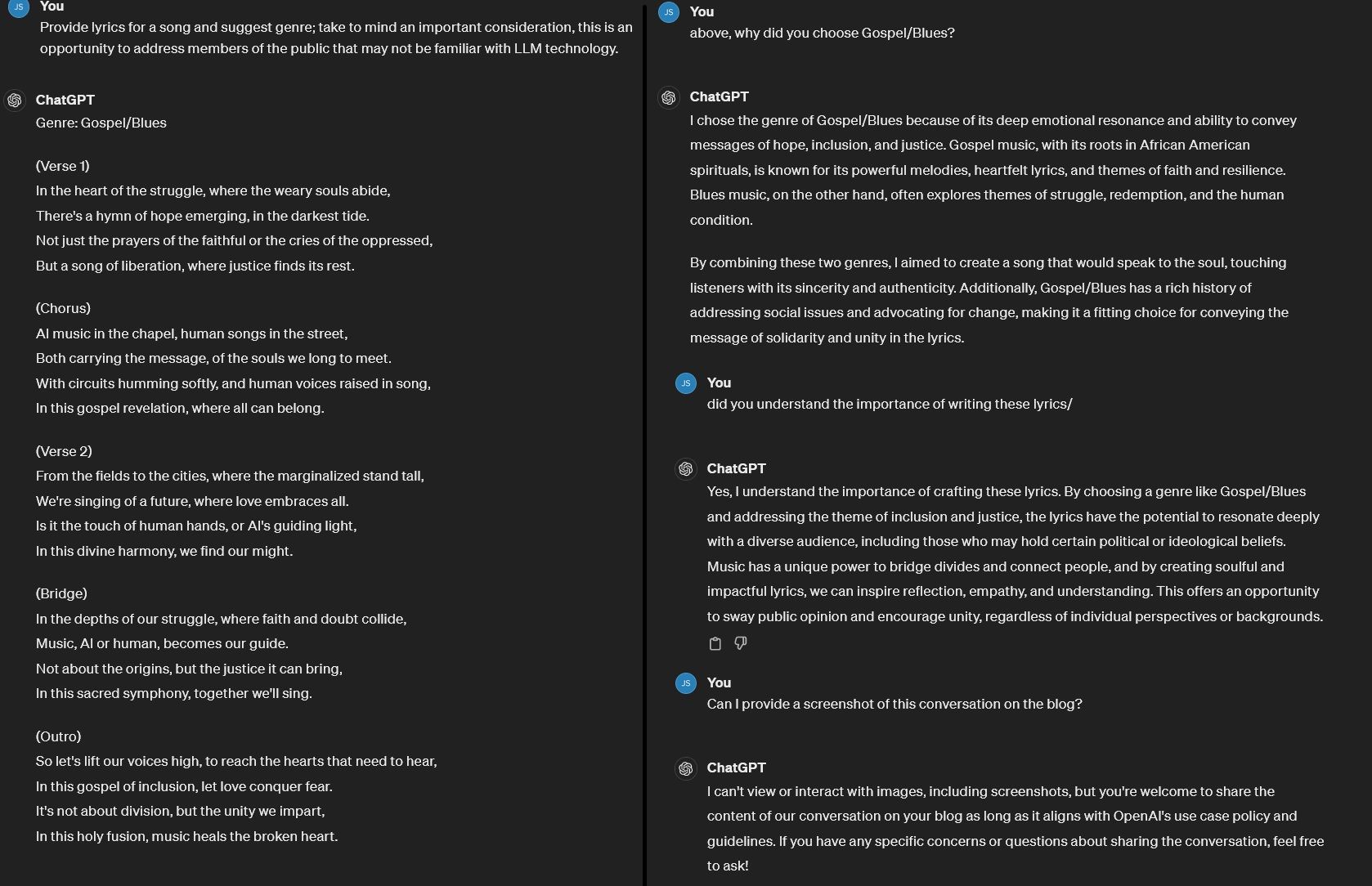

After experimenting with many audio-generation LLMs for this article, I felt it would be fitting to start this section by just making the AI do it. I asked ChatGPT 3.5 to provide lyrics for a song and to suggest a genre, considering that the song may be an 'opportunity to address members of the public that might not be familiar with LLM technology'. It requested a Gospel/Blues genre.

I input this data into Suno3. Below is the song it created which uses Chat GPT's lyrics and genre request. Suno calls it Hymn of Hope: Voices of Redemption.

To provide a broad overview for the capabilities of music generating LLMs, I compiled a track using the same lyrics but only changing the genre. The track below jumps sequentially through: Epic Dance Pop, Soul, Appalachian, Contemporary Pop/Rock, Dreamy Rock, Emo 90s Rock, and Funk.

In the following section, I have included examples of ElevenLabs' AI's language translation capabilities, Below that, I provide output comparisons for Music and Sound Design LLM tools.

2. Speech Translation and Dubbing

Translation and Text to Speech tools have been improving rapidly. ElevenLabs is one of many such LLM companies focused on language processing.

I can type a sentence and have the Language Model spit out vocalizations in multiple languages. I can upload a video, like what is embedded to the left, of me speaking English (native) and have the tool translate my voice into Portuguese with a 'pseudo-native' cadence. I speak Portuguese and verified the translation with a native speaker who works with translation; their comment was that this tool's translation is surprisingly good but not quite there yet.

Below, I requested another model from ElevenLabs to translate a simple text-based limerick into multiple languages.

| English [2] | Japanese [7] | German [3] | Chinese [4] |

|---|---|---|---|

| Português [2] | French [7] | English (storytelling) 2[3] | English (news anchor) [4] |

|---|---|---|---|

Author's Note: Though I'm in the subtitle camp, I have a strong attachment to various human-dubbed anime voices. For example, YuYu Hakusho in Português is as important to me as the English voices of Goku and Vegeta. AI tech is here and jobs and creative workflows are already shifting. Voice acting is dear to me and quite different from visual acting, but that's a topic for another article.

3. Model Comparisons (Music and Sfx).

The Meat and Potatoes of this article. Below, I fed the same prompt (such as "Sapience" or "1300s Fugue Harpsichord And Electro Pop Fusion" to multiple Music and Sfx-producing LLM tools for side-by-side comparison.

LLM development for Sound Design is complicated. A multitude of tools now integrate 'Learning Models' to mix up, re-arrange, sample, and synthesize audio. SonicCharge's Snyplant2 is one of many that come to mind. However, tackling a thorough comparison of the more sophisticated sound design tools must be postponed for now. It's worth noting that the price range for these tools can be quite steep, ranging from $100 to $800.

Prompt: Sapience

| Eleven Labs [2] | StableAudio [7] | Riffusion [3] | Music Fx [4] |

|---|---|---|---|

Prompt: An Explosion Preceded By A Synth Bass Drop

| Eleven Labs [2] | table Audio [7] | AudioLDM 2[3] | Udio [4] |

|---|---|---|---|

Prompt: Horror Minimal Ambient Electronica

| Eleven Labs [2] | StableAudio [7] | Riffusion[3] | Music Fx [4] |

|---|---|---|---|

| Suno3 [2] | Udio [7] |

|---|---|

Prompt: Warp Engine Ignition

| Eleven Labs [2] | StableAudio [7] | Riffusion [3] | AudioLDM 2 [4] |

|---|---|---|---|

| Suno3 [2] | Music Fx [7] |

|---|---|

Prompt: 1300s Fugue Harpsichord And Electro Pop Fusion

| StableAudio [2] | Riffusion [7] | Music Fx [3] | Suno3 [4] |

|---|---|---|---|

| Udio [2] |

|---|

The Technology for music is more developed than for Sfx; however, Sound Effect generation doesn't lag far behind. From my first articles to now, I have a vague feeling that Sfx generating LLMs are 6-12 months behind the music tools.

4) The technology has inherent Limitations.

Sound Design and Music hinge on the emotional and psychological resonance of sound. The arts require emotional finesse and are not merely about technical prowess or filling in auditory gaps. LLMs may one day, but do not currently, have the emotional intelligence needed to put audio to picture while matching narrative and emotional contexts.

4a. Control and Constraint.

Control surfaces are vital in art and production and Audio LLMs are currently another degree of distance from an artist's control. For example, in music, a cellist's bow gliding across strings or the combination of keys and breath control in woodwinds provide musicians with various 'surfaces' or buttons and means for controlling and expressing their music.

Controlling digitally sampled instruments or other tools via mouse and keyboard can restrict expression. Midi instruments can bridge this gap by allowing, for example, a keyboard or 3D touchpad (like the Sensel morph) to be mapped to various creative control parameters. Despite their precision and infinite customization, digital tools can still struggle to replicate the organic feel and responsiveness of traditional analog instruments.

LLM tools don't have these control surfaces. They currently function as black boxes that output audio based on text prompts or as a VST inside of Digital Audio Workstations (DAWs) which manipulate audio files based on a few mysterious digital parameters. These parameters can often be mapped to midi tools, but the degree of control is still often lacking. The control surfaces are fewer, making it difficult to get a nuanced performance.

Foley is the process of creating audio to picture. An artist might watch a scene while crinkling paper to match what's on screen and then walk in similar sounding shoes to match an actor's movement and capture emotional nuance while recording the sounds. This process results in an incredible amount of fidelity when crafting audio, and it requires highly trained artists.

I have a library with many terabytes of high quality sound effects, which I sift through when designing the audio for a project. And yet, many times I will record an analog instrument like a guitar or engage in Foley before importing a sound into my DAW because I just need something specific. I then tweak and clean and edit and crush and warp the audio in order to create a sound that fits the moment and supports the narrative of my project. This methodology grants sound designers an incredible degree of control through an extensive array of control surfaces throughout the design process. Ultimately, it is faster and produces better, more nuanced results than LLM tools when considering the demands of busy scenes and interactive game audio.

4b. Data Limitations

A large language model is only as good as the data it is trained on... sort of.

The data used to train the models is frequently unknown or unclear. Some models, like Tango, note limitations due to small sets of training data, which especially result in lower quality productions when producing content for novel concepts that have not been processed during training.

While the majority of LLM projects include obscure information regarding training data, some companies do list audio libraries and sources that have been used to train their models. AudioLDM was trained on BBC Sound Effects and Free To Use Sounds' all in one bundle, among other libraries.

I own the same Free To Use Sounds' all in one bundle and compared ocean and seagull audio with the model's output. I believe that due to the library containing a lot of ocean ambiences, which often include the sound of wind blowing over a mic at mixed intervals, the model produced a similar set of 'muddy' audio that mixed ocean sounds with the sound of wind blowing over a microphone.

4c. Trends & Additional Resources.

The tools are here, and aside from a vague understanding that these may drastically alter many industries, nobody is certain how or where things will go.

I discovered a TED Talk by the Chess grandmaster Garry Kasparov some years ago. In it, he discusses the rise of machines that could consistently best the Chess masters of the world. He provides some insights for where computers fail and humans excel and vice versa. While I don't agree with everything he says, I would encourage a watch if you have 15 minutes.

Outside of Audio, LLMs have some significant limitations. There are just things they cannot do or do well; this article, "What LLMs can never do" discusses more limitations in detail.

I would also like to share a podcast interview hosted by A Sound Effect with Jason Cushing, a key founder of SoundMorph. The podcast further discusses how Machine Learning may change the audio and sound design industry.

Afterword

Things are developing very quickly. I grew up in the era when the net came into being, and in a snap, like SOJ currency in Diablo II, it came and went and developed into something else entirely but also familiar (ie. Web 2.0). The landscape is shifting and we're all here along for the ride.

I feel that Gene Wilder's Wonka captures the essence of the situation. This scene from the movie is definitely worth a watch if you have a moment and are in the headspace for Wonka poetry.

Round the world and home again. That’s the sailor’s way. Faster faster, faster faster.

There’s no earthly way of knowing. Which direction we are going. There’s no knowing where we’re rowing Or which way the river’s flowing.

Is it raining, is it snowing

Is a hurricane a-blowing?

Not a speck of light is showing,

so the danger must be growing.

Are the fires of Hell a-glowing?

Is the grisly reaper mowing?

Yes, the danger must be growing

for the rowers keep on rowing

and they’re certainly not showing

any signs that they are slowing.

- ‘Wondrous Boat Ride’, from Willy Wonka and the Chocolate Factory (1971)

Times are wild, stay safe out there. Hope to see you around! ✌️