Comparing AI LLM Audio models (part 2)

Part 1. Pink Beatles in a Purple Zeppelin

Part 2. Unnatural Selection

Part 3. Resilient

Citations & References included at the bottom of this article

In this series, I aim to assess the functional use of cutting-edge LLM tools or "AI" for Sound Design and Music Production. It is not my intention to delve into the ethical implications of these tools nor assign moral judgments to them.

Part 1. Provides an introduction for the series.

Part 2. Compares many prompts and popular models.

Part 3. Discusses model limitations and highlights to keep an eye on.

I enjoy pushing technology to its limit and examining fail points. What unfolds when you tell a human-voice-focused AI to create synthy explosions, or task a Music AI with producing the sound of a swamp-dwelling sasquatch in the woods? Let's discover the answers below.

Audio LLMs / AI are bounding forward along with movements scrutinizing their role in media development[1]. Mentioned in Part 1., my focus, with caution, is directed toward gaining a deeper understanding of these tools.

In this article, you will find a side-by-side comparison for many leading algorithms. Sound Design AI models are featured first, followed by Music Models.

- AudioLDM 2 (Text-to-Audio & Music & Speech), by University of Surrey and ByteDance.

- AudioCraftPlus (text-to-audio, based on AudioGen & others), by github user 'GrandaddyShmax' student team.

- Make An Audio (Text-to-audio) by Zhejiang and Peking University with ByteDance AI Lab.

- AudioGen and MusicGen, by FAIR team of Meta AI (Facebook).

- MusicLM ATK, by the Google research team.

- Riffusion, by Seth Forsgren and Hayk Martiros.

Additional information for these models is provided in part 3 of this series.

Default settings were used for all models. The only alterations involved a few music tracks and one AudioGen Sfx track where I added fade-ins and decreased the overall volume.

Some of the models featured in this article were trained for music production, others for language vocalization, and some purely for sound design. In this first set of comparisons, I had each model produce sound for the same prompt:

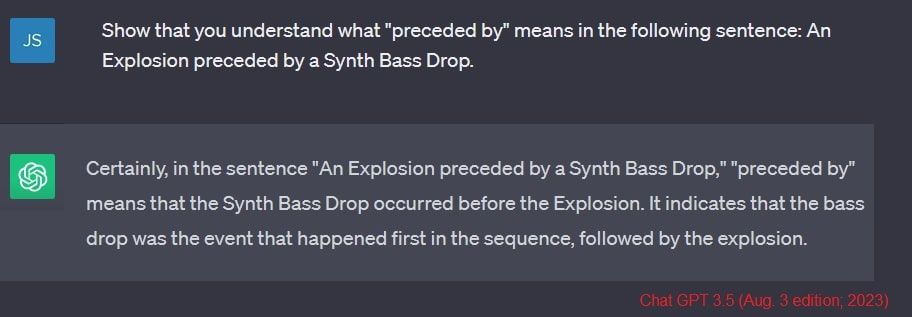

"An Explosion preceded by a Synth Bass Drop".

In addition to production quality, with this prompt I am interested in each model's understanding of syntax. Chat GPT 3.5 seems to understand what I am looking for:

Prompt (set A): An Explosion preceded by a Synth Bass drop

Note. Check your volume levels before listening.

| Tango [2] | AudioLDM 2 [3] | AudioGen [4] | AudioCraftPlus [5] | Bark [6] |

|---|---|---|---|---|

![Spectrogram for the audio produce by Tango for the promp []](https://ambientartstyles.com/content/images/size/w1000/2023/09/Tango---explosion-min.jpg)

|

![Spectrogram for the audio produce by [] from the prompt: 80s synth playing an arpeggio.](https://ambientartstyles.com/content/images/size/w1000/2023/09/audio-ldm2---explosion-min.jpg)

|

![Spectrogram for the audio produce by [] from the prompt: 80s synth playing an arpeggio.](https://ambientartstyles.com/content/images/size/w1000/2023/09/AudioGen---explosion-min.jpg)

|

![Spectrogram for the audio produce by [] from the prompt: 80s synth playing an arpeggio.](https://ambientartstyles.com/content/images/size/w1000/2023/09/audiocraftplus---explosion-min.jpg)

|

![Spectrogram for the audio produce by [] from the prompt: 80s synth playing an arpeggio.](https://ambientartstyles.com/content/images/size/w1000/2023/09/bark---explosion-min.jpg)

|

Prompt (set B): An Explosion preceded by a Synth Bass drop

| MakeAnAudio [7] | Chirp1 [8] | Chirp2 [9] | MusicLM ATK [10] | Riffusion [11] |

|---|---|---|---|---|

![Spectrogram for the audio produce by [] from the prompt: 80s synth playing an arpeggio.](https://ambientartstyles.com/content/images/size/w1000/2023/09/MakeAnAudio---explosion-min.jpg)

|

![Spectrogram for the audio produce by [] from the prompt: 80s synth playing an arpeggio.](https://ambientartstyles.com/content/images/size/w1000/2023/09/chirp1---explosion-min.jpg)

|

![Spectrogram for the audio produce by [] from the prompt: 80s synth playing an arpeggio.](https://ambientartstyles.com/content/images/size/w1000/2023/09/chirp2---explosion-min.jpg)

|

![Spectrogram for the audio produce by [] from the prompt: 80s synth playing an arpeggio.](https://ambientartstyles.com/content/images/size/w1000/2023/09/Google---explosion-min.jpg)

|

![Spectrogram for the audio produce by [] from the prompt: 80s synth playing an arpeggio.](https://ambientartstyles.com/content/images/size/w1000/2023/09/riffusion---explosion-min.jpg)

|

A & B - Quick Notes.

While all the Sound Effect oriented models produced audio with two phases, only Tango and MakeAnAudio organized them in the proper sequence. MakeAnAudio incorporated an unusual synthy ambiance before an explosion, while Tango included an unusual garble of sounds in its sequence. Chirp, an LLM designed for music production, was the only model to provide a true bass drop.

Overall, the explosions were somewhat bland; however the output from MakeAnAudio surprised me with a unique jumping-off-point just as the clip ends.

The clips that follow

are the product of submitting identical prompts to four leading Sfx models.

Prompt: Warp Engine Ignition

| Tango [2] | MakeAnAudio [7] | AudioLDM 2[3] | AudioGen [4] |

|---|---|---|---|

Prompt: Footsteps on Broken Glass

| Tango [2] | MakeAnAudio [7] | AudioLDM 2[3] | AudioGen [4] |

|---|---|---|---|

Prompt: Swamp Creature Sasquatch Howl

| Tango [2] | MakeAnAudio [7] | AudioLDM 2[3] | AudioGen [4] |

|---|---|---|---|

Prompt: Seagulls

| Tango [2] | MakeAnAudio [7] | AudioLDM 2[3] | AudioGen [4] |

|---|---|---|---|

Prompt: Horror Minimal Ambient Electronica

| Tango [2] | MakeAnAudio [7] | AudioLDM 2[3] | AudioGen [4] |

|---|---|---|---|

Brief Notes

The output from 'Seagulls' contains wind-like artifacts which highlights that the models are only as good as the data they are trained on - Noisy training-data, noisy outputs.

It is interesting to explore the diverse range of 'interpretations' with input terms like 'Horror Minimal Ambient Electronica' where things are vague and open to, well.. interpretation by an AI model.

Music!

Music production enjoys greater popularity and understandably receives significantly more attention, along with access to larger learning libraries, compared to sound effect design.

Nonetheless, I believe that the quality of Sound Design models is not far behind those designed for music. What gets wild is the next step some models are taking beyond basic audio production, a topic discussed in part 3.

Prompt: 1300s fugue harpsichord and electro pop fusion

| MusicLM ATK [2] | Chirp [7] | AudioLDM 2[3] | MusicGen [4] |

|---|---|---|---|

Prompt: Warp Engine Ignition

| MusicLM ATK [2] | Chirp [7] | AudioLDM 2[3] | MusicGen [4] |

|---|---|---|---|

Prompt: Classical guitar bolero dmnr

| MusicLM ATK [2] | Chirp [7] | AudioLDM 2[3] | MusicGen [4] |

|---|---|---|---|

Prompt: Creepy Horror Minimal Ambient Electronica

| MusicLM ATK [2] | Chirp [7] | AudioLDM 2[3] | MusicGen [4] |

|---|---|---|---|

Prompt: Epic Soundtrack Theme Action Intense

| MusicLM ATK [2] | Chirp [7] | AudioLDM 2[3] | MusicGen [4] |

|---|---|---|---|

Prompt: Sapience

| MusicLM ATK [2] | Chirp [7] | AudioLDM 2[3] | MusicGen [4] |

|---|---|---|---|

These outputs were captured over a couple day period, and during my revision process I found a few duplicate audio clips. I had submitted the same prompt to the same model multiple times and received very different audio outputs.

I went through each model to double check their output using identical prompts and found MusicGen's (Meta/Facebook) to be very inconsistent - each output varied drastically from one another. This was unusual because, if not identical, outputs from most models were consistent in style and composition.

These models have limitations. More details on these models and their limitations are also explored in Part 3.

To play you out: this is what a music model sounds like when told to make:

the sound of a swamp dwelling sasquatch.

Afterword

Images of the humans in Wall-E sometimes come to mind when I contemplate the LLM revolution. Mentioned in Part 1, I initially perceived this AI audio technology as a far-off fantastical concept, much like how I view the world of Wall-E.

If I were to leave a message for my future self, it would convey my hope that we continue to mindfully appreciate what we consume. Let's engage in extraordinary games and immerse ourselves in compelling content that challenges us. Let's keep challenging ourself. We got this!💪

Citations & References

Note. Some of these models share a primary publication, for additional information on each model see both references labeled 'a' and 'b', below.

Spectrograms were created using iZotope RX9.

[1] Maddaus, Gene. “SAG-AFTRA Seeks Approval for Second Strike Against Video Game Companies.” Variety (blog), September 1, 2023. https://variety.com/2023/biz/news/sag-aftra-seeks-video-game-strike-approval-1235711251/.

[2] Tango

[2a] Ghosal, Deepanway, Navonil Majumder, Ambuj Mehrish, and Soujanya Poria. DeCLaRe Lab, “Text-to-Audio Generation Using Instruction-Tuned LLM and Latent Diffusion Model.” arXiv, May 29, 2023. http://arxiv.org/abs/2304.13731.

[2b] “TANGO: Text to Audio Using iNstruction-Guided diffusiOn.” Python. 2023. Reprint, Deep Cognition and Language Research (DeCLaRe) Lab, September 10, 2023. https://github.com/declare-lab/tango.

[3]AudioLDM 2

[3a ] Liu, Haohe, Qiao Tian, Yi Yuan, Xubo Liu, Xinhao Mei, Qiuqiang Kong, Yuping Wang, Wenwu Wang, Yuxuan Wang, and Mark D. Plumbley. “AudioLDM 2: Learning Holistic Audio Generation with Self-Supervised Pretraining.” arXiv, August 10, 2023. https://doi.org/10.48550/arXiv.2308.05734.

[3b] 刘濠赫 Haohe (Leo) Liu /. “AudioLDM 2.” Python, September 10, 2023. https://github.com/haoheliu/AudioLDM2.

[4] AudioGen

[4a] Kreuk, Felix, Gabriel Synnaeve, Adam Polyak, Uriel Singer, Alexandre Défossez, Jade Copet, Devi Parikh, Yaniv Taigman, and Yossi Adi. “AudioGen: Textually Guided Audio Generation.” arXiv, March 5, 2023. https://doi.org/10.48550/arXiv.2209.15352.

[4b] GitHub. “Audiocraft/Docs/AUDIOGEN.Md at Main · Facebookresearch/Audiocraft.” Accessed September 10, 2023. https://github.com/facebookresearch/audiocraft/blob/main/docs/AUDIOGEN.md.

[5] Audio Craft Plus

[5a] Copet, Jade, Felix Kreuk, Itai Gat, Tal Remez, David Kant, Gabriel Synnaeve, Yossi Adi, and Alexandre Défossez. “Simple and Controllable Music Generation.” arXiv, June 8, 2023. http://arxiv.org/abs/2306.05284.

[5b] GrandaddyShmax. “AudioCraft Plus.” Python, September 9, 2023. https://github.com/GrandaddyShmax/audiocraft_plus.

[6] Bark

[6a] “🐶 Bark.” Jupyter Notebook. 2023. Reprint, Suno, September 10, 2023. https://github.com/suno-ai/bark.

[6b] Notion. “Notion – The All-in-One Workspace for Your Notes, Tasks, Wikis, and Databases.” Accessed September 10, 2023. https://www.notion.so.

[7] Make an audio

[7a] Huang, Rongjie, Jiawei Huang, Dongchao Yang, Yi Ren, Luping Liu, Mingze Li, Zhenhui Ye, Jinglin Liu, Xiang Yin, and Zhou Zhao. “Make-An-Audio: Text-To-Audio Generation with Prompt-Enhanced Diffusion Models.” arXiv, January 29, 2023. https://doi.org/10.48550/arXiv.2301.12661.

[7b] “GitHub - AIGC-Audio/AudioGPT: AudioGPT: Understanding and Generating Speech, Music, Sound, and Talking Head.” Accessed September 10, 2023. https://github.com/AIGC-Audio/AudioGPT.

[8] Chirp

[8a] “About Suno.” Accessed September 10, 2023. https://www.suno.ai/about.

[8b] “🐶 Bark.” Jupyter Notebook. 2023. Reprint, Suno, September 10, 2023. https://github.com/suno-ai/bark.

[9]MusicLM ATK

[9a] “MusicLM.” Accessed September 10, 2023. https://google-research.github.io/seanet/musiclm/examples/.

[9b] Agostinelli, Andrea, Timo I. Denk, Zalán Borsos, Jesse Engel, Mauro Verzetti, Antoine Caillon, Qingqing Huang, et al. “MusicLM: Generating Music From Text.” arXiv, January 26, 2023. https://doi.org/10.48550/arXiv.2301.11325.

[10] Riffusion

[10a] Martiros, Seth Forsgren and Hayk. “Riffusion.” Riffusion. Accessed September 10, 2023. http://www.riffusion.

[10b] GitHub. “Riffusion.” Accessed September 10, 2023. https://github.com/riffusion.

[11] MusicGen:

[11a] Copet, Jade, Felix Kreuk, Itai Gat, Tal Remez, David Kant, Gabriel Synnaeve, Yossi Adi, and Alexandre Défossez. “Simple and Controllable Music Generation.” arXiv, June 8, 2023. https://doi.org/10.48550/arXiv.2306.05284.

[11b] GitHub. “Audiocraft/Model_cards/MUSICGEN_MODEL_CARD.Md at Main · Facebookresearch/Audiocraft.” Accessed September 10, 2023. https://github.com/facebookresearch/audiocraft/blob/main/model_cards/MUSICGEN_MODEL_CARD.md.